Multimodal AI combines vision, hearing, language, and other forms of perception in a single system, and many see it as a key direction for the future of artificial intelligence. The technology promises to change how we interact with machines, making those interactions more intuitive and natural.

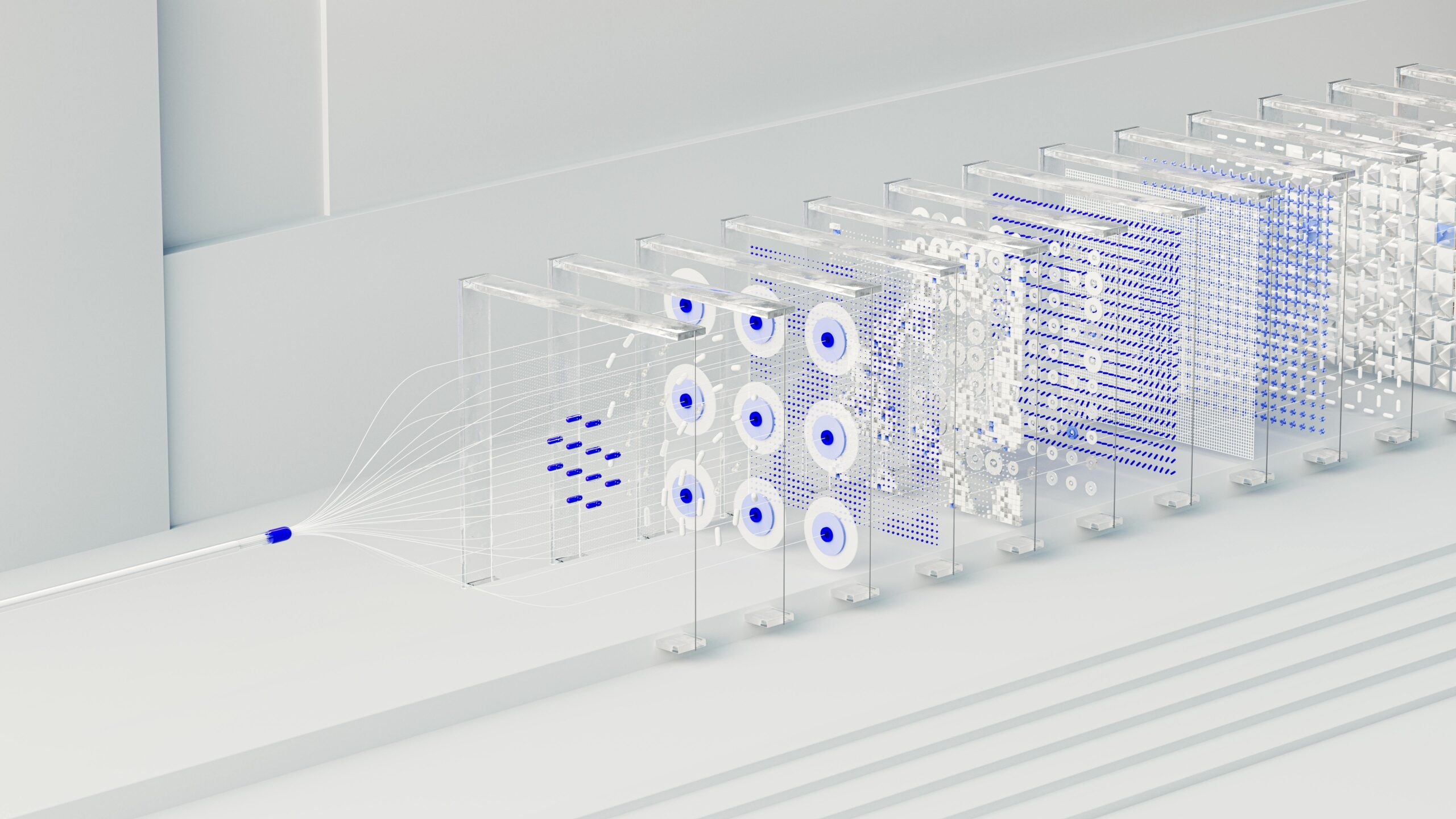

Imagine an artificial intelligence capable of looking at an image, listening to a conversation, and reading text simultaneously, integrating all this information to understand the complete context of a situation. This is the power of multimodal AI, one of the most promising innovations in the field of artificial intelligence.

What Does Multimodal Mean?

Multimodal AI is an approach that enables artificial intelligence systems to process and understand information from different sensory modalities at the same time. Unlike traditional single-modality systems, which handle only one type of input (only text, only images, or only audio), multimodal AI can:

- Analyze images while interpreting textual descriptions

- Understand videos with audio and subtitles simultaneously

- Process gestures, facial expressions, and spoken language together

- Integrate data from different sensors in real time

Revolutionary Applications

The possibilities offered by multimodal AI are remarkable and span many areas of daily life. In medicine, these systems can analyze X-rays, clinical reports, and the symptoms patients describe together, helping clinicians reach more accurate and complete diagnoses.

In education, multimodal AI can create personalized learning experiences that adapt to how each student prefers to receive information, combining verbal explanations, visual diagrams, and practical examples.

For accessibility, this technology represents a major advance: it can automatically describe images for people with visual impairments, transcribe conversations for people who are deaf or hard of hearing, or provide real-time translations that include sign language.

The Challenge of Integrated Understanding

The true power of multimodal AI lies not simply in processing multiple types of data, but in its ability to create meaningful connections between them. When a system can see that a person is smiling while saying “thank you” in a warm tone, it can grasp the emotional context of the situation far better than it could from any one of those signals alone.

This integrated understanding opens new frontiers for smarter virtual assistants, more sophisticated security systems, and safer autonomous vehicles that can interpret road signs, weather conditions, and other drivers’ behaviors simultaneously.
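As a rough illustration of how signals from different modalities can be combined, here is a minimal late-fusion sketch in Python. It assumes three hypothetical per-modality classifiers (vision, audio, text) that each output a probability for a few emotion labels; the labels, scores, and equal weights are invented for illustration and do not come from any real model. Late fusion, which simply averages each modality's output, is only one way to combine modalities; other approaches fuse features earlier or learn a joint representation.

```python
from typing import Dict

# Probability assigned to each emotion label by one modality's (hypothetical) classifier.
Scores = Dict[str, float]


def late_fusion(modality_scores: Dict[str, Scores], weights: Dict[str, float]) -> Scores:
    """Combine per-modality label probabilities with a weighted average (simple late fusion)."""
    # Collect every label that any modality reported.
    labels = set()
    for scores in modality_scores.values():
        labels.update(scores)

    total_weight = sum(weights[m] for m in modality_scores)
    fused: Scores = {}
    for label in labels:
        # A modality that did not report a label contributes 0 for it.
        weighted_sum = sum(
            weights[m] * scores.get(label, 0.0) for m, scores in modality_scores.items()
        )
        fused[label] = weighted_sum / total_weight
    return fused


# Invented example outputs for the smiling "thank you" scenario.
observations = {
    "vision": {"gratitude": 0.6, "neutral": 0.3, "sarcasm": 0.1},  # smiling face
    "audio": {"gratitude": 0.7, "neutral": 0.2, "sarcasm": 0.1},   # warm tone of voice
    "text": {"gratitude": 0.5, "neutral": 0.2, "sarcasm": 0.3},    # the words "thank you"
}
weights = {"vision": 1.0, "audio": 1.0, "text": 1.0}  # equal trust in each modality (assumption)

fused = late_fusion(observations, weights)
best = max(fused, key=fused.get)
print(best, round(fused[best], 2))
```

Taken alone, the text scores leave noticeable room for sarcasm; averaging in the face and voice signals lowers that possibility and points more clearly toward gratitude, which mirrors the smiling “thank you” example.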

Towards a More Natural Future

The evolution toward multimodal AI is a fundamental step toward artificial systems that can interact with us in a more natural and intuitive way. Instead of people adapting to technology’s limitations, technology will adapt to the way we naturally perceive and communicate with the world.

As this technology continues to evolve, we are entering an era in which artificial intelligence may become not just a powerful tool but a cognitive partner, one that understands the world around us through the same variety of senses we use to perceive it.