Explainable AI aims to make the decisions of artificial intelligence systems understandable to the people who use them and are affected by them. This transparency is increasingly seen as essential for building trust and ensuring accountability as AI is deployed in high-stakes settings.

In artificial intelligence, one of the most pressing challenges is the so-called “black box” problem: AI systems make decisions without users being able to understand the reasoning behind them. Explainable AI (XAI) aims to address this problem by opening the black box and making the decision-making mechanisms of AI systems transparent.

What is Explainable AI

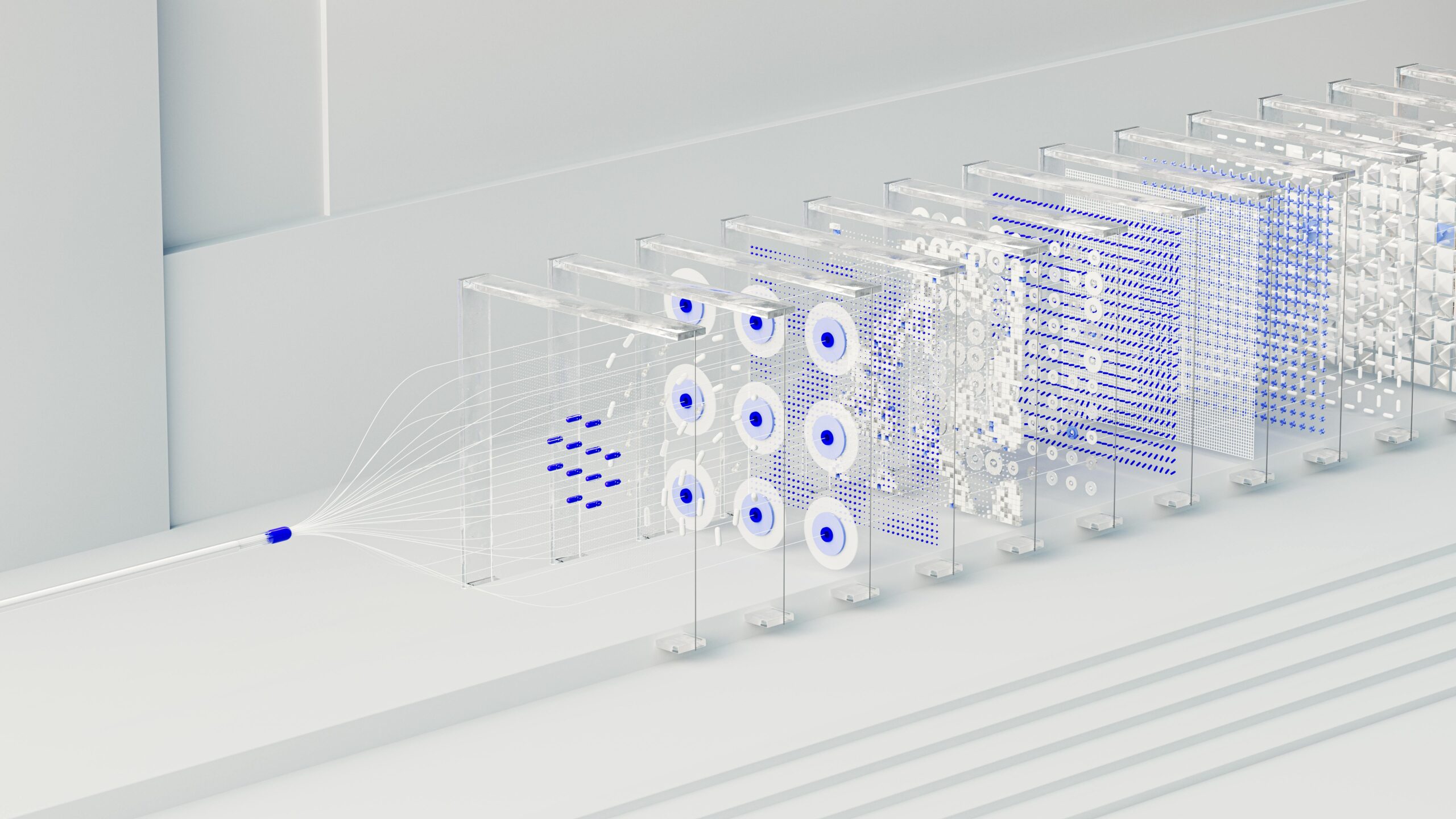

Explainable AI refers to methods and techniques that make the outputs of AI systems understandable to humans. Whereas many modern machine learning models, such as deep neural networks and large ensembles, operate as “black boxes,” XAI aims to provide clear explanations of how and why a system reached a particular conclusion or recommendation.

This transparency isn’t just an academic concern: it has become a critical necessity in sectors where AI decisions can have significant impacts on people’s lives, such as medicine, finance, justice, and security.

Why Explainable AI is Essential

The need for explainable AI stems from several crucial factors:

- Trust and adoption: Users are more likely to accept and use AI systems when they can understand their operation

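- Regulatory compliance: Regulations such as the EU’s GDPR require that people receive meaningful information about the logic involved in automated decisions that affect them, often described as a “right to explanation”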

- Bias and fairness: Transparency makes it possible to identify and correct biases in AI models

- Debugging and improvement: Understanding decision processes helps optimize system performance

Explainable AI Techniques and Approaches

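Various methodologies exist to make AI more interpretable. LIME (Local Interpretable Model-agnostic Explanations) explains an individual prediction by fitting a simple, interpretable model that approximates the complex model in the neighborhood of that input, while SHAP (SHapley Additive exPlanations) uses Shapley values from cooperative game theory to quantify each feature’s contribution to a prediction.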
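To make the Shapley-value idea behind SHAP concrete, the sketch below computes exact Shapley values by brute force for a tiny hypothetical model (`model`, `coalition_value` and `shapley_values` are illustrative names, not part of any library). It assumes one common convention: features outside a coalition are held at a baseline, here all zeros. Enumerating every coalition costs on the order of 2^n model calls, which is why the `shap` library relies on faster approximations and model-specific algorithms rather than this direct enumeration.

```python
from itertools import combinations
from math import factorial


def model(x):
    # Hypothetical toy model: linear terms plus an interaction between x[0] and x[1].
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[1] - 0.5 * x[2]


def coalition_value(subset, x, baseline):
    # Features in `subset` take the explained instance's values; all others
    # are held at the baseline (one common convention, not the only one).
    z = [x[i] if i in subset else baseline[i] for i in range(len(x))]
    return model(z)


def shapley_values(x, baseline):
    # Exact Shapley values: for each feature, average its marginal gain over
    # every coalition of the other features, using the Shapley weights.
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for combo in combinations(others, size):
                s = set(combo)
                gain = coalition_value(s | {i}, x, baseline) - coalition_value(s, x, baseline)
                phi[i] += weight * gain
    return phi


x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(x, baseline)
print([round(p, 6) for p in phi])  # [5.0, 5.0, -2.0]
print(round(sum(phi), 6), model(x) - model(baseline))  # 8.0 8.0
```

The interaction term contributes 6 to the prediction and is split evenly between features 0 and 1, and the values sum to the difference between the prediction and the baseline prediction (the "efficiency" property that makes SHAP explanations additive).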

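Other approaches include attention mechanisms in deep learning models, which indicate which parts of the input the model weights most heavily (although attention weights are not always a faithful explanation of a prediction), and inherently interpretable models such as decision trees, whose decision path can be read directly.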
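As a rough illustration of why a decision tree's reasoning can be read directly, the sketch below walks a small hand-built tree and records each test it passes through. The tree, its features (`num_links`, `sender_known`), thresholds and labels are all hypothetical; a real tree would be learned from data, for example with a library such as scikit-learn.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    feature: Optional[str] = None   # feature tested at an internal node
    threshold: float = 0.0          # go left if value <= threshold, else right
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[str] = None     # set only on leaf nodes


def predict_with_path(node, sample):
    # Follow the tree from the root, recording every comparison made;
    # the recorded path is the explanation for the final label.
    path = []
    while node.label is None:
        value = sample[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, path


# Hypothetical hand-built spam filter; all values are illustrative only.
tree = Node(
    feature="num_links", threshold=5,
    left=Node(label="not spam"),
    right=Node(
        feature="sender_known", threshold=0.5,
        left=Node(label="spam"),
        right=Node(label="not spam"),
    ),
)

label, path = predict_with_path(tree, {"num_links": 12, "sender_known": 0})
print(label)
for step in path:
    print(" -", step)
```

Here the explanation is simply the list of conditions the input satisfied on its way to a leaf, so no separate explanation method is needed.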

Practical Applications

In healthcare, explainable AI allows doctors to understand why a system suggested a particular diagnosis, increasing trust in AI recommendations. In finance, banks can explain to customers the reasons behind loan decisions, helping them meet transparency requirements.
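One simple way a lender might turn a scoring model into customer-facing reasons is sketched below, assuming a hypothetical linear scoring model: each feature's contribution is measured against a reference applicant, and the most negative contributions are reported as "reason codes". All feature names, weights and the reference applicant are invented for illustration; this is not any regulator's prescribed method. For a linear model, these contributions coincide with Shapley values computed against the same reference point.

```python
# Hypothetical linear credit-scoring model; every weight and value is illustrative.
WEIGHTS = {
    "income_k": 0.04,        # annual income in thousands
    "debt_ratio": -3.0,      # debt-to-income ratio
    "late_payments": -0.8,   # count of late payments
    "years_employed": 0.15,
}
BIAS = -1.0
# Hypothetical reference applicant that contributions are measured against.
REFERENCE = {"income_k": 50, "debt_ratio": 0.3, "late_payments": 0, "years_employed": 5}


def score(applicant: dict) -> float:
    return BIAS + sum(w * applicant[f] for f, w in WEIGHTS.items())


def decision(applicant: dict) -> str:
    return "approve" if score(applicant) >= 0 else "deny"


def reason_codes(applicant: dict, top_k: int = 2) -> list:
    # Each feature's contribution relative to the reference applicant.
    contributions = {f: w * (applicant[f] - REFERENCE[f]) for f, w in WEIGHTS.items()}
    # Most negative contributions first: these pushed the score down the most.
    negatives = sorted((c, f) for f, c in contributions.items() if c < 0)
    return [f for _, f in negatives[:top_k]]


applicant = {"income_k": 40, "debt_ratio": 0.6, "late_payments": 3, "years_employed": 2}
print(decision(applicant), reason_codes(applicant))  # deny ['late_payments', 'debt_ratio']
```

Measuring contributions against a reference applicant, rather than against zero, keeps the reasons meaningful: a feature only counts against the customer if it is worse than the reference value.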

In criminal justice, XAI can help reveal whether risk assessment systems perpetuate discriminatory biases by making explicit which factors drive each evaluation.
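Explanations of individual evaluations are often complemented by a group-level audit. The sketch below compares false positive rates (people flagged as high risk who did not go on to reoffend) across two groups, using a handful of hypothetical records. This is only one of several fairness criteria, and such criteria can be mutually incompatible, so a gap here is a prompt for investigation rather than a verdict.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group false positive rate.

    records: iterable of (group, predicted_high_risk, reoffended) tuples.
    """
    false_pos = defaultdict(int)  # flagged high risk but did not reoffend
    negatives = defaultdict(int)  # everyone who did not reoffend
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                false_pos[group] += 1
    return {g: false_pos[g] / negatives[g] for g in negatives}


# Hypothetical toy records for two groups, A and B.
records = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", True, False), ("B", False, False), ("B", False, False), ("B", True, True),
]
rates = false_positive_rates(records)
print(rates)  # {'A': 0.25, 'B': 0.5}
```

In this toy data, members of group B who did not reoffend are flagged twice as often as those in group A, the kind of disparity that feature-level explanations can then help trace back to specific inputs.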

Challenges and Future

Despite progress, explainable AI still faces significant challenges. There is often a trade-off between accuracy and interpretability: simpler models are easier to explain but may be less accurate. Additionally, different stakeholders, such as developers, regulators and affected individuals, may require different types of explanations.

The future of explainable AI points toward more sophisticated solutions that maintain high accuracy while providing comprehensible explanations. The goal is to create artificial intelligence that not only performs well but can also justify its outputs in terms people can understand.