Interpretable AI makes it possible to understand how algorithms reach their decisions. That transparency is redefining trust between humans and machines in critical sectors like healthcare, finance, and justice.

As artificial intelligence grows more complex, a fundamental need emerges: understanding how and why AI systems make specific decisions. Interpretable AI, a term often used interchangeably with Explainable AI (XAI), answers this need by offering transparency in a world dominated by increasingly sophisticated algorithms.

What is Interpretable AI?

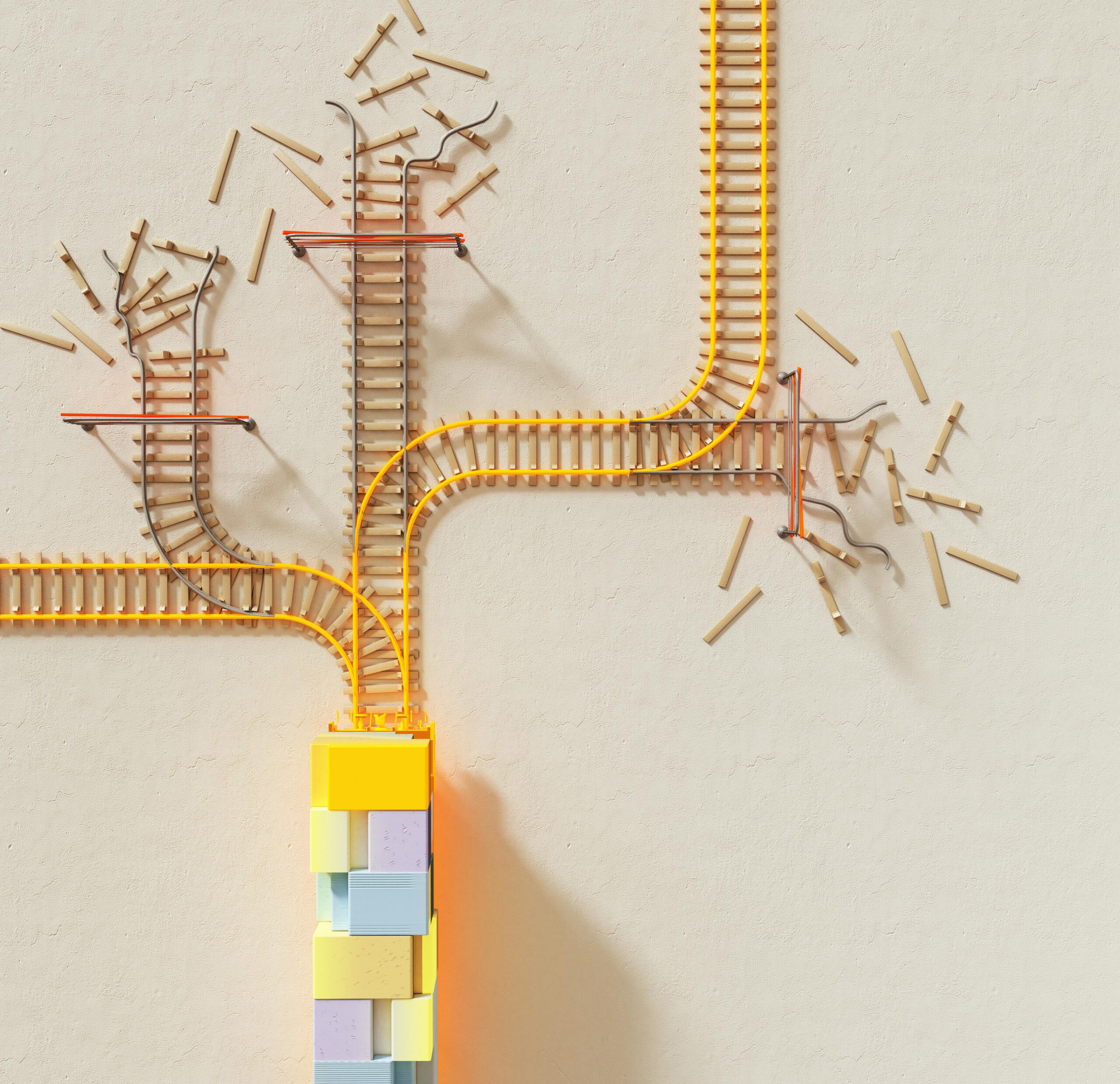

Interpretable artificial intelligence is a branch of AI that focuses on creating models and systems capable of explaining their decisions in terms understandable to humans. Unlike traditional machine learning “black boxes,” these systems offer a transparent window into their decision-making processes.

This technology doesn’t simply provide results but illustrates the reasoning that leads to such conclusions, using visualizations, textual explanations, and intuitive representations that even non-experts can understand.
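To make the idea of "a result plus its reasoning" concrete, here is a minimal sketch of a model that is interpretable by design: a linear scorer that returns its decision together with each feature's signed contribution. The loan setting, the feature names, the weights, and the threshold are all hypothetical values chosen for illustration, not taken from any real system.

```python
from dataclasses import dataclass

# Hypothetical weights for an illustrative loan scorer (assumed, not real data).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.15}
BIAS = -1.0
THRESHOLD = 0.0  # scores at or above this value are approved


@dataclass
class Explanation:
    approved: bool
    score: float
    contributions: dict  # feature name -> signed contribution to the score


def score_with_explanation(applicant: dict) -> Explanation:
    """Score an applicant and keep the per-feature terms that produced the score."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    return Explanation(score >= THRESHOLD, score, contributions)


def describe(exp: Explanation) -> str:
    """Turn the contributions into a short textual explanation, largest impact first."""
    ranked = sorted(exp.contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    verdict = "approved" if exp.approved else "rejected"
    reasons = ", ".join(f"{name} ({value:+.2f})" for name, value in ranked)
    return f"Loan {verdict} (score {exp.score:+.2f}). Factors by impact: {reasons}"


if __name__ == "__main__":
    sample = {"income_k": 50, "debt_ratio": 0.5, "years_employed": 2}
    print(describe(score_with_explanation(sample)))
```

Because the score is a plain sum, every term in the explanation is exactly what the model computed. More complex models give up that property, which is why they need separate explanation techniques.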

Revolutionary Applications

The impact of interpretable AI extends across several critical sectors:

- Healthcare: Doctors can understand why an AI system recommends a particular diagnosis or treatment, increasing trust and improving care quality

- Financial sector: Banks can explain to customers the reasons behind loan approval or rejection, ensuring transparency and regulatory compliance

- Justice system: Risk assessment algorithms can provide clear explanations that support legal decisions

- Automotive: Autonomous vehicles can explain their driving decisions, increasing public acceptance

Challenges and Innovations

Developing interpretable AI presents significant challenges. Engineers must balance a model's accuracy against its interpretability, and the two often pull in opposite directions: the most accurate models, such as deep neural networks, tend to be the hardest to explain. However, recent innovations are narrowing this gap.

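Several techniques are changing how we interact with AI. LIME (Local Interpretable Model-agnostic Explanations) fits a simple surrogate model around a single prediction. SHAP (SHapley Additive exPlanations) attributes a prediction to individual input features using Shapley values from cooperative game theory. Attention mechanisms show which parts of the input a model weighted most heavily, although how faithfully attention weights explain a decision is still debated. Together, these tools make systems more transparent and easier to audit.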
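The Shapley values behind SHAP can be illustrated with a brute-force sketch. This is not the `shap` library's API, and it skips the approximations SHAP uses to scale: it enumerates every feature subset, so it is only practical for a handful of features. The toy model, the instance, and the all-zeros baseline below are assumptions made for the example.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one prediction; cost grows as 2^n in the feature count."""
    n = len(instance)

    def value(coalition):
        # Features in the coalition take the instance's value, the rest the baseline's.
        x = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(x)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size that exclude feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when it joins coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(x):
    # Hypothetical model: one additive term plus one interaction term.
    return 2 * x[0] + x[1] * x[2]


if __name__ == "__main__":
    instance, baseline = [1, 2, 3], [0, 0, 0]
    print(shapley_values(toy_model, instance, baseline))
```

In this toy case the additive feature receives its full effect, while the two interacting features split their joint effect equally. The attributions also sum to the difference between the model's output on the instance and on the baseline, which is the additivity property the "Additive" in SHAP refers to.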

The Future of AI Transparency

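Interpretable AI is not just a technological trend, but an ethical and legal necessity. In Europe, GDPR already entitles people to meaningful information about the logic behind automated decisions that significantly affect them, and the AI Act adds transparency obligations for high-risk systems. The ability to explain algorithmic decisions is becoming a fundamental requirement.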

Looking to the future, we can expect increasingly sophisticated AI systems that not only perform well but also communicate their decision-making processes naturally and intuitively, creating a true partnership between human and artificial intelligence based on mutual understanding.