Ethics in artificial intelligence has become a priority for developers and companies. Creating transparent and responsible AI systems requires new frameworks and methodologies to ensure fairness and trust.

As artificial intelligence adoption accelerates across sectors, from recruitment to healthcare and from finance to justice, AI ethics has moved to the forefront. The goal is no longer just to build effective systems, but to ensure they are fair, transparent, and responsible.

The Black Box Challenge

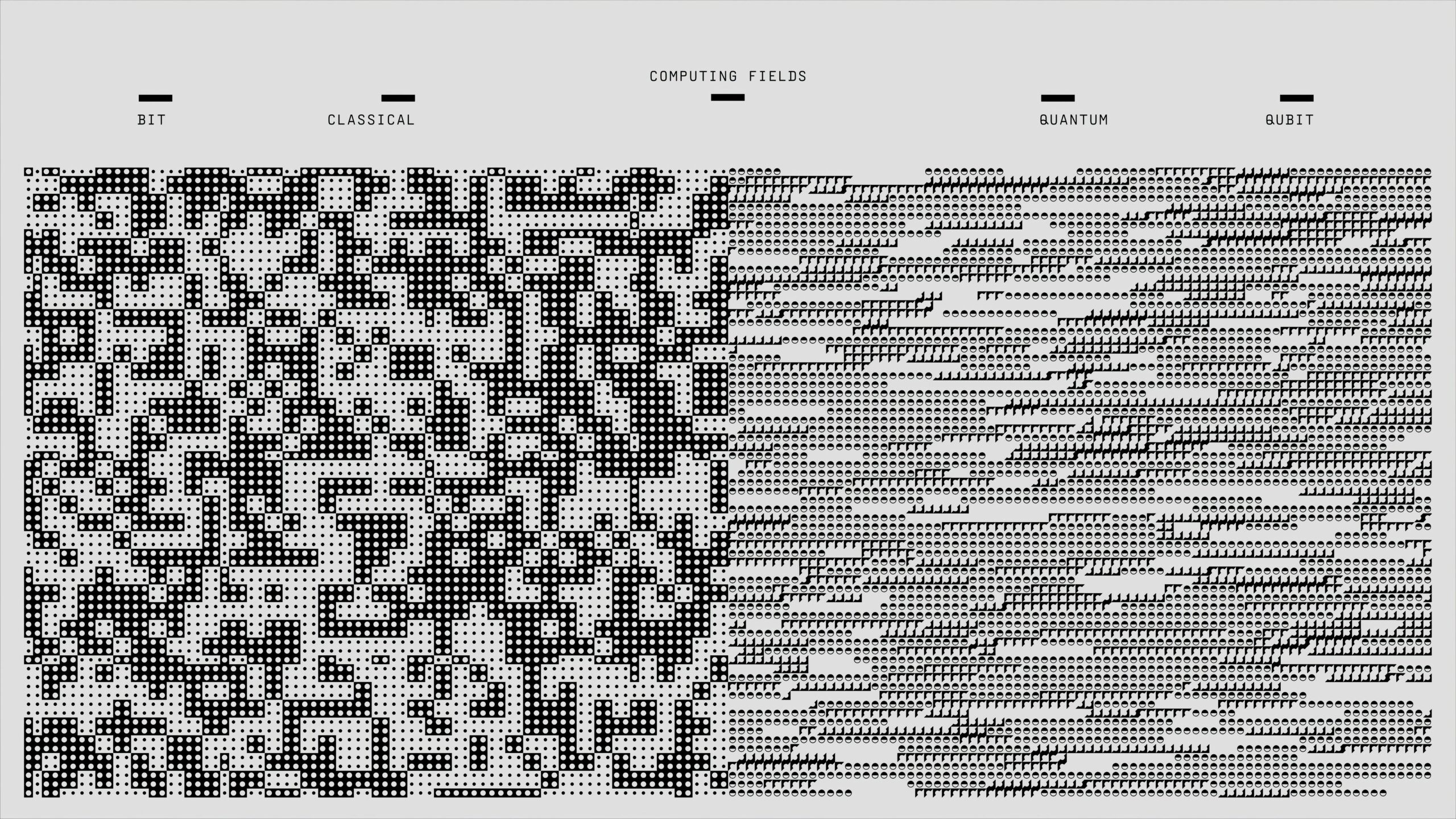

One of the main problems with modern AI is the lack of transparency. Deep learning models, while powerful, often operate as “black boxes” whose internal decision-making processes are difficult to understand. This opacity becomes problematic when AI influences crucial decisions like medical diagnoses or loan approvals.

To address this challenge, researchers have developed the field of Explainable AI (XAI), which aims to make algorithmic decisions understandable through visualization techniques and through analysis of which input features most influence a given decision.
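To make "analysis of the most influential features" concrete, here is a minimal sketch of permutation importance, one common model-agnostic XAI technique (chosen for illustration; the article does not name a specific method). It shuffles one feature at a time and measures how much accuracy drops. The toy loan model, the data, and all function names are hypothetical.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows the model classifies correctly."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled.

    A large drop means the model relies heavily on that feature;
    a drop of zero means the model ignores it.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing feature j with its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical "loan approval" model: approves when income (feature 0)
# exceeds 50 and ignores feature 1 (e.g. an application ID).
def toy_model(row):
    return int(row[0] > 50)


rng = random.Random(42)
X = [[rng.uniform(0, 100), rng.uniform(0, 100)] for _ in range(200)]
y = [toy_model(row) for row in X]

print(permutation_importance(toy_model, X, y))
```

Because the toy model never reads feature 1, shuffling it leaves accuracy unchanged and its importance is exactly zero, while shuffling income causes a large drop. Libraries such as scikit-learn (`sklearn.inspection.permutation_importance`) implement the same idea with more scoring options.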

Frameworks for Responsible AI

Leading tech companies and international organizations are developing structured ethical frameworks. These include fundamental principles such as:

- Fairness: ensuring AI doesn’t discriminate against specific groups

- Accountability: clearly defining human responsibilities in AI decisions

- Transparency: making decision-making processes understandable

- Privacy: protecting users’ sensitive data

- Robustness: ensuring system reliability and security

Practical Tools for Implementation

The industry is developing concrete tools to put AI ethics into practice. Bias-testing tools help detect discrimination in datasets and models. Algorithmic audits are becoming standard practice, and model documentation in the form of “model cards” makes a system’s intended uses, capabilities, and limitations explicit.
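One basic check that bias-testing tools commonly perform is comparing positive-outcome rates across groups. The sketch below computes per-group selection rates and the disparate impact ratio (lowest rate divided by highest). The 0.8 threshold reflects the "four-fifths rule" from US employment-selection guidance and is an assumption here, not something the article prescribes; the data and function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Share of positive decisions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no positive decisions in any group")
    return min(rates.values()) / highest


# Hypothetical screening outcomes (1 = shortlisted) for two applicant groups.
decisions = [1, 1, 1, 0, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
ratio = disparate_impact_ratio(decisions, groups)
print(rates, ratio)
if ratio < 0.8:  # four-fifths rule of thumb (assumed threshold)
    print("Potential adverse impact: investigate further")
```

Here group A is shortlisted at a rate of 0.8 and group B at 0.2, giving a ratio of 0.25, well below the threshold. A low ratio is a signal for closer review rather than proof of discrimination; a real audit would also consider sample sizes and other fairness metrics.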

Diversity in development teams also matters: teams with varied backgrounds and perspectives are often better positioned to spot potential biases and ethical issues during development.

The Future of Regulation

The European Union has taken the lead with the AI Act, widely described as the first comprehensive law on artificial intelligence. The regulation classifies AI systems by level of risk and imposes obligations, including transparency and safety requirements, that become stricter as the risk increases.

The trend toward more ethical and transparent AI is not only a moral issue but also an economic one: user trust is essential for the widespread adoption of AI technologies. Companies that invest in responsible systems today are building tomorrow’s competitive advantage.